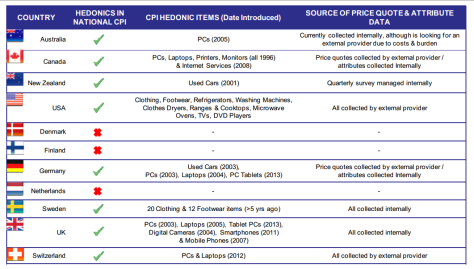

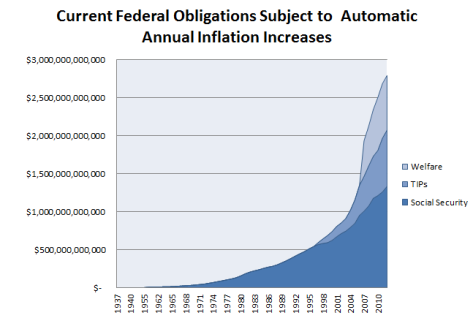

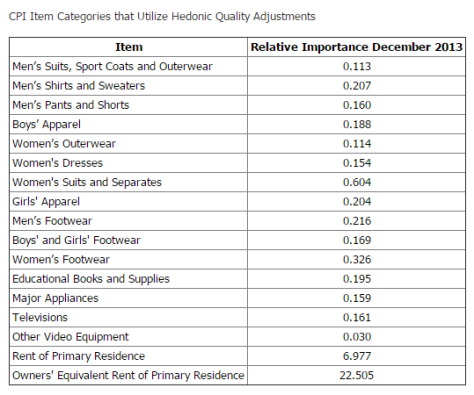

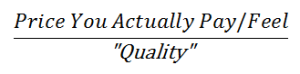

The BLS uses hedonic quality adjustment (HQA) to value a new product: it applies the pricing relationships that held in the past to estimate what the new product would have cost back then. Setting aside the myriad fundamental arguments one could make against this process, let’s look at a bona fide statistical pitfall that Hedonic Quality Regression (HQR), the regression behind the adjustment, fails to address. At best this introduces a bias into the CPI; at worst it renders the prices used completely misleading.

HQR was introduced by economists to tackle a very real problem: products keep changing configuration with each new cycle. Very few products stay exactly the same over time; companies are constantly adding new features as technological boundaries are pushed to new limits.

However, technology has a tendency to create things we have never seen before. We can all find a point where a technology we take for granted today didn’t exist. From the telegraph to the internet to the iPad, these were all completely new products at one point, with little to no historical pricing data. This means that whenever a truly different product is released, BLS economists have to use HQR to extrapolate what this unique object would have cost had it existed in the past. If this sounds like nonsense, it is: statistically and intuitively.

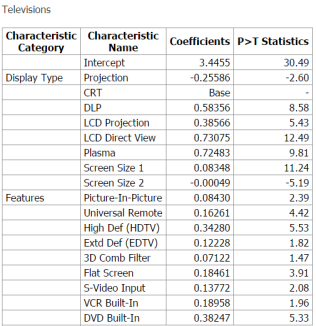

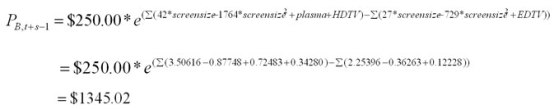

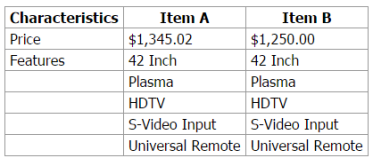

The statistical model the BLS uses in its HQRs is Ordinary Least Squares (OLS), one of the simplest forms of linear regression and something covered in every econometrics 101 class. OLS is basically the children’s toy of statistical models; it is introduced to neonatal econometricians to get them used to how bigger and better models work.
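For reference, here is the estimator in standard textbook notation (not taken from BLS documentation). With $X$ the matrix of product characteristics plus a column of ones, $\mathbf{x}_i$ its $i$-th row, and $y$ the vector of prices (or log prices), OLS chooses the coefficients that minimize the sum of squared residuals. Assuming $X^\top X$ is invertible, the solution has a closed form, and with a single scalar regressor $x$ it reduces to the familiar slope and intercept:

$$
\hat{\beta} = \arg\min_{\beta} \sum_{i=1}^{n} \left(y_i - \mathbf{x}_i^\top \beta\right)^2 = \left(X^\top X\right)^{-1} X^\top y
$$

$$
\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}, \qquad \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}
$$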

As you might expect, OLS has severe limitations, but today we will focus on one: the model’s complete failure to extrapolate. Intuitively, this means OLS works best when it’s presented with data that looks like data it has seen before. When an OLS model encounters data outside the bounds of its understanding, it yields completely nonsensical results.

> Performing extrapolation relies strongly on the regression assumptions. The further the extrapolation goes outside the data, the more room there is for the model to fail due to differences between the assumptions and the sample data or the true values.
>
> – proverb
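The quote can be made precise for the simplest case. Under the standard OLS assumptions (a correctly specified linear model with independent, constant-variance errors), the estimated standard error of the fitted mean at a new input $x_0$ in a one-regressor model is

$$
\widehat{\operatorname{se}}\left(\hat{y}_0\right) = s \sqrt{\frac{1}{n} + \frac{(x_0 - \bar{x})^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2}}
$$

where $s$ is the residual standard error and $n$ the number of observations. The uncertainty grows with the squared distance of $x_0$ from the sample mean $\bar{x}$, and that is the optimistic case: the formula assumes the linear form still holds at $x_0$, so it says nothing about the relationship itself ceasing to be linear.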

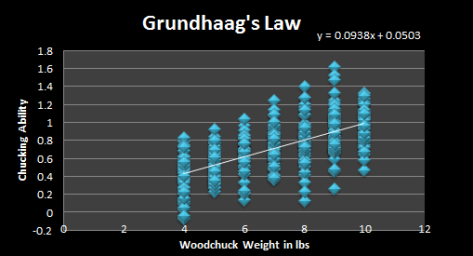

To illustrate this effect, let’s consider a world in which the mythical wood-chucking woodchuck is an economic reality: competitive wood chucking is big business, drawing millions of viewers. The pioneering work of Dr. Olaf Grundhaag established that there is a strong linear statistical relationship between woodchuck weight (which ranges from 4 to 10 lbs) and wood-chucking ability, measured in board feet of wood. Now known as Grundhaag’s Law, the relationship can be summarized in the now-famous graph:

As you might imagine, breeding champion chuckers has become a very competitive business. One enterprising breeder retains the services of Korean cloning sensation Dr. Hwang Woo-Suk to splice grizzly bear DNA into a woodchuck embryo, which is then implanted in, and carried to term by, a trained circus bear named Bubbles.

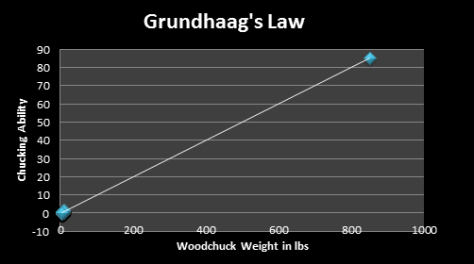

Bubbles gives birth to an enormous woodchuck with unknown wood chucking abilities. It reaches chucking maturity weighing in at 850 lbs. Rather than risk annihilation via wood hurled by his monster woodchuck, the breeder retains the services of Dr. Grundhaag to estimate the wood chucking prowess of the bear-chuck hybrid. This information will be used to build a custom training enclosure.

Knowing the limitations of OLS regression, Dr. Grundhaag faces a classic principal-agent problem: he knows his model’s predictive power is suspect because the bear-chuck’s weight lies so far outside the previous range for woodchucks (4 to 10 lbs). However, he doesn’t want to let easy money slip through his fingers or, worse yet, look stupid by admitting his model can’t handle the task. So he steps up to the plate and plugs 850 lbs into his model:

Dr. Grundhaag cautiously delivers his prediction to the breeder: 85 board feet of chucking ability (a decent-sized tree)! The breeder is so excited he orders 5 more monster bearchucks. But he is shocked when the bearchuck tears its own arm clear out of the socket trying to chuck a champion tree. What the hell went wrong? The breeder is ruined. Contemplating a mixture of revenge and suicide, he phones Dr. Grundhaag at a conference and asks, “Why did your prediction fail?”

Grundhaag’s model failed because of something called non-linearity. When woodchucks weigh between 4 and 10 lbs, the relationship between ability and weight looks linear: adding 1 lb to your chuck always seems to create the same change in chucking ability. But adding over 800 lbs to your chuck is bound to create some instabilities.

This is a fact of nature: scale matters. If you invented an anti-shrink ray gun and scaled an ant up to the size of a football stadium, it wouldn’t be able to lift 10 times its body weight… it might not even be able to lift itself. An ant’s body structure is built for its size. Look at the health problems faced by gigantic humans.
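The scaling argument here is usually called the square-cube law. As a rough first approximation, scale every length of an animal by a factor $k$: muscle strength tracks cross-sectional area while body mass tracks volume, so

$$
\text{strength} \propto k^2, \qquad \text{weight} \propto k^3, \qquad \frac{\text{strength}}{\text{weight}} \propto \frac{1}{k}
$$

Treating the bearchuck as a geometrically scaled-up woodchuck (an assumption; a bear-woodchuck splice would not be exactly that), an animal 85 times heavier than a 10 lb woodchuck is about $85^{1/3} \approx 4.4$ times longer, so each pound of it has only about $1/4.4$ of the strength per pound of the original.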

It’s the same with giant bearchuck hybrids; they just can’t take the strain of slinging the redwood that Grundhaag’s Law predicts. Sure, they can chuck more wood than any woodchuck in the history of chucking, just not as much as a linear relationship extrapolated from a completely different range of input data would suggest.

Coming back to reality, when a manufacturer discontinues a product or introduces a completely new product to the marketplace, the BLS is doing the equivalent of applying Grundhaag’s Law: it is extrapolating outside the range of data used to build the Hedonic Quality Regressions. The results should be taken with big, fat granules of salt; in other words, they cannot be trusted. Nor can they be verified, since the BLS keeps data such as this under lock and key.